We are planning some software upgrades to our main website and, as in previous iterations, I started to write some tests. Just some basic stuff to make sure that the config we have set up does what we think it should do. I am not testing the applications or content yet, but hopefully some of what we have learnt can help others to do that.

In the past I have written some unit tests in Python that were simple and ran from the command line. This time we thought that it would be good to make these tests slightly more permanent.

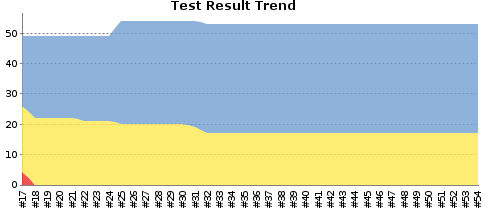

So I picked some tools as detailed below and we set about working out a set of tests. For a start we have just trawled through the long and historical Apache config picking out bits that look important and just writing a feature header. Now we are going back and putting in the details of all the tests. This is a great start and it's almost fun to see the graph of successful tests go slowly blue. (Jenkins seems to like blue not green for test results but there is a plugin to change that if you want. There is a plugin for everything!)

The aim of creating these tests is obviously to give more confidence when we move over to the new server, but I also hope that we can move to a different way of managing configuration changes. Moving to a state where we write the test first and then the config should be a much more relaxed situation. There is also the ability to raise alerts into Nagios if we require it. So if we see some security tests fail we could raise a Nagios alert and quickly take action.

Writing tests has also forced us to go back through the old configs and ask some questions about what each section is trying to achieve. I have already removed one section that is no longer relevant and have earmarked a couple more for after the upgrade.

We are still adding tests to the features that we defined in the first run through and that will give us a good base to be going on with but I am sure that there are more that we can add that are not directly related to the Apache config. Testing all the redirect rules is a massive job and we will need to automate it at least for the initial set up. When we have something that is useful I would like to hand the management over to the web authors for the most part.

Next up is to run puppet-lint over the Puppet config from Jenkins and shame us all into fixing our style, and then to start writing some puppet-cucumber tests! I already have it sending test results to our IM server for maximum annoyance!

Tools

I will go through some of the technical bits of the tools for those that are not familiar with them but there is better information on their respective websites.

Cucumber

Cucumber is a BDD (Behaviour Driven Development) tool that allows you to write feature definitions in English and then parse them to create real tests. You write out a feature and then some scenarios that would test that feature in a form of English, or your local language, called Gherkin. The resulting file looks a bit like this:

    Feature: The web server should have a standard set of error pages

      Scenario: Requesting a non-existent page should give the code 404 and the default page
        When I visit /notarealpage.html
        Then I should get a response code 404
        And the page should contain the content "University of York"

The first word of each of those lines is a keyword. Scenario, When, Then and And are all used by Gherkin to know how to interpret the line. You then write some Ruby code that can deal with each of the lines. So for the When and Then lines you may write:

    When /I visit (.*)/ do |page|
      @url = "http://#{@host}/#{page}"
      @response = fetchpage(@url)
    end

    Then /I should get a response code (\d+)/ do |responsecode|
      @response.code.should == responsecode
    end

Cucumber adds some extra keywords to work with Gherkin templates but basically it's just Ruby. The first part builds a full URL using the '@host' variable that we have set previously in the environment and the page that comes from the Gherkin line. The second part matches the response code check and uses RSpec's should feature to test it. I am assuming that we have already defined the 'fetchpage' function.
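For anyone who wants a starting point, a minimal 'fetchpage' could look something like the sketch below. This is an assumption for illustration, not necessarily the helper behind these tests: it uses Ruby's standard Net::HTTP library and deliberately does not follow redirects, so that 3xx codes stay visible to the steps. Net::HTTP returns the status code as a string, which is why comparing it with the captured '(\d+)' text works.

```ruby
require 'net/http'
require 'uri'

# Hypothetical implementation of the 'fetchpage' helper assumed in the text.
# Returns a Net::HTTPResponse; its #code is a String such as "404".
def fetchpage(url)
  uri = URI.parse(url)
  # URI::HTTPS is a subclass of URI::HTTP, so both schemes pass this check.
  raise ArgumentError, "not an http(s) URL: #{url}" unless uri.is_a?(URI::HTTP)

  # get_response performs a single GET and does not follow redirects.
  Net::HTTP.get_response(uri)
end
```

With this in place, '@response.code' and '@response.body' are available to any later step in the same scenario.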

As you can probably see, I can now write easy-to-read tests in English for all my error pages without having to add any more code.

These are some really basic examples and I would advise you to go and read the site at http://cukes.info/ to get a real feel for the full features of Cucumber.
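Because the steps are reusable, scenarios can also be generated rather than typed by hand, which is one way a bulk job such as the redirect rules might be automated for the initial set up. The sketch below is only an illustration: the function name and the second path are made up, it reuses the two step phrasings shown above, and it assumes that whatever fetches the page does not follow redirects. Checking where a redirect points would need an extra step definition that is not shown here.

```ruby
# Build Gherkin text for a list of [path, expected_code] pairs, reusing the
# existing "I visit" and "response code" steps so no new Ruby is needed.
def generate_feature(title, cases)
  lines = ["Feature: #{title}", ""]
  cases.each do |path, code|
    lines << "  Scenario: Requesting #{path} should give the code #{code}"
    lines << "    When I visit #{path}"
    lines << "    Then I should get a response code #{code}"
    lines << ""
  end
  lines.join("\n")
end

# Hypothetical data: the redirect path is invented for illustration.
cases = [
  ["/notarealpage.html", 404],
  ["/old-section/", 301]
]

puts generate_feature("Generated response code checks", cases)
```

The output can be saved as a '.feature' file and run like any hand-written one.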

Jenkins

Jenkins is a fork of Hudson. Hudson is a fork of Jenkins. They are both Continuous Integration (CI) servers, separated by politics. Jenkins seems to be the more active and better supported of the two. CI is a workflow in which code is built and tested automatically as it is checked into a repository, and the reports from that code change are stored and alerted on. I am just using it for the nice web GUI and its support for running tests regularly and interpreting the results. It has plugins supporting all types of tests and builds, from Maven and Ant scripts to test coverage, parser warnings and lint packages like jslint or puppet-lint. Again, go and read more at http://jenkins-ci.org/. Running Cucumber with the command 'cucumber --format junit --out logs/' will create a load of JUnit-format XML files that Jenkins can understand and turn into pretty graphs and details of errors.
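The same XML files could also feed the Nagios idea mentioned earlier. The sketch below is a guess at how that might be wired up, not something we have running: it assumes each file contains 'testsuite' elements carrying the usual JUnit 'failures' and 'errors' attributes, uses the REXML library that ships with Ruby, and follows the Nagios plugin convention of exit status 0 for OK and 2 for CRITICAL. The function names are made up.

```ruby
require 'rexml/document'

# Nagios plugin convention: exit status 0 = OK, 2 = CRITICAL.
NAGIOS_OK = 0
NAGIOS_CRITICAL = 2

# Sum the failures and errors attributes of every <testsuite> in the XML.
# Missing attributes count as zero.
def failed_count(xml_text)
  doc = REXML::Document.new(xml_text)
  total = 0
  doc.elements.each('//testsuite') do |suite|
    total += suite.attributes['failures'].to_i + suite.attributes['errors'].to_i
  end
  total
end

# Turn a failure count into a [message, exit_status] pair.
def nagios_status(failed)
  if failed.zero?
    ["OK - all test cases passed", NAGIOS_OK]
  else
    ["CRITICAL - #{failed} test case(s) failed", NAGIOS_CRITICAL]
  end
end

# Read whatever the junit formatter wrote to logs/ (an empty directory
# simply reports OK here; a real check might treat that as UNKNOWN).
failed = Dir.glob('logs/*.xml').sum { |file| failed_count(File.read(file)) }
message, code = nagios_status(failed)
puts message
# A real check script would finish with: exit code
```

Nagios reads the first line of output as the status text and the exit status as the state, so the last two steps are all a plugin needs to provide.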